How to Make Microsoft 365 Copilot Enterprise-Ready From a Security and Risk Perspective

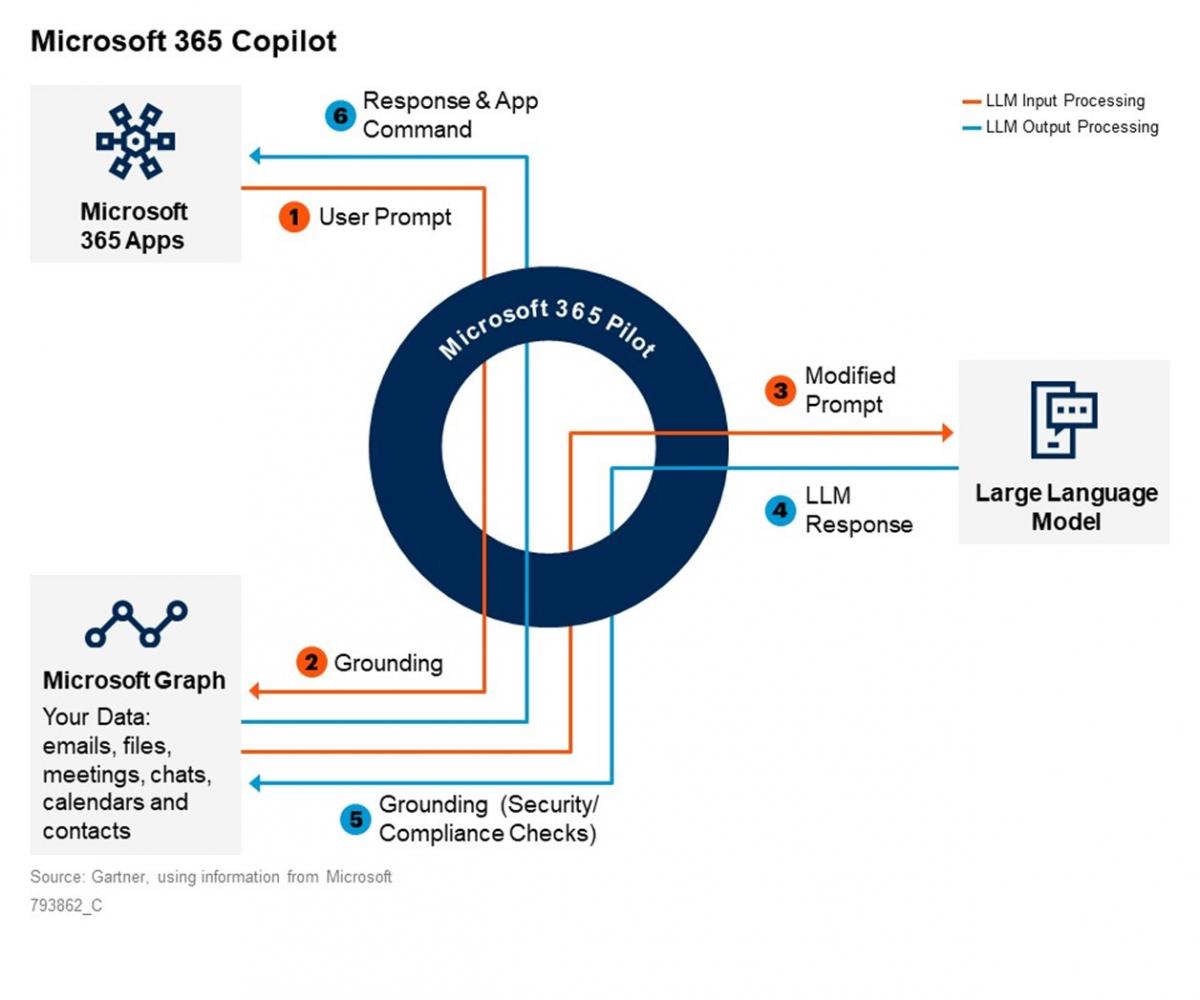

Microsoft 365 Copilot inherits all of Microsoft’s cloud security controls, but these were not designed for new AI capabilities. Security and risk management leaders must implement verifiable controls for AI data protection, privacy, and filtering of large language model content inputs and outputs.

We just published “Quick Answer: How to Make Microsoft 365 Copilot Enterprise-Ready From a Security and Risk Perspective,” in which my colleagues Matt Cain, Jeremy D’Hoinne, Nader Henein, and Dennis Xu and I explore this topic.

At the time of writing, Microsoft 365 Copilot is not, in Gartner’s view, fully “enterprise-ready” — at least not for enterprises operating in regulated industries or subject to privacy regulations such as the EU’s GDPR or forthcoming Artificial Intelligence Act.

Microsoft might, however, add more security and privacy controls to Copilot before it becomes generally available, as a result of its dealings with participants in its current preview program.

Our note includes recommendations for making Microsoft 365 Copilot ready for enterprise use from a security and risk perspective. These recommendations can also be applied to other enterprise applications that use third-party-hosted large language models (LLMs):

- Filtering Microsoft 365 Copilot’s inputs and outputs against enterprise-specific policies. Such filtering is not included in native Azure content filtering, but specialised tools from smaller vendors such as AIShield Guardian and Calypso AI Moderator are starting to offer input and output content-filtering capabilities that sit between user prompts and LLMs.

- Verifying Microsoft’s data governance and protection assurances that confidential enterprise information transmitted to its large language model (LLM), for example in the form of stored prompts, is not compromised.

- The model itself is stateless, but confidential information can be retained in its prompt history (if customers do not use APIs or do not opt out of prompt retention), and potentially in other logging systems in the model’s environment. This creates vulnerabilities that bad actors might exploit; alternatively, LLM system administrators could simply make configuration mistakes, as has been reported with OpenAI’s LLM environment.

- In the meantime, customers must rely on Microsoft’s licensing agreements to define the rules governing shared responsibility for data protection. Once it is generally available, Copilot will come under the Microsoft 365 Product Terms.

- Addressing concerns about LLM transparency so that enterprises can conduct the impact assessments required to comply with regulations such as the EU’s General Data Protection Regulation (GDPR) and upcoming Artificial Intelligence Act.

- Using third-party security products with Copilot for both non-AI-related and AI-specific security. This will become increasingly important as attackers begin to execute both direct and indirect prompt injection attacks.
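
To make the first recommendation concrete, here is a minimal sketch of what filtering inputs and outputs against enterprise-specific policies could look like when it sits between user prompts and an LLM. It is an illustration only, not how Copilot, Azure content filtering, or any vendor product works. Everything in it is an assumption: the `PolicyRule` and `FilterResult` types, the `apply_policy` and `guarded_completion` functions, the "Project Falcon" codename, and the simple regular expressions. The `call_llm` argument stands in for whatever client an enterprise actually uses, and real tools would likely rely on richer classifiers than pattern matching.

```python
import re
from dataclasses import dataclass, field


@dataclass
class PolicyRule:
    """One hypothetical enterprise policy rule."""
    name: str
    pattern: re.Pattern
    action: str  # "block" rejects the text, "redact" masks the match


@dataclass
class FilterResult:
    allowed: bool
    text: str
    violations: list[str] = field(default_factory=list)


# Placeholder rules for illustration; a real policy set would be far richer.
RULES = [
    PolicyRule("project_codename",
               re.compile(r"\bproject\s+falcon\b", re.IGNORECASE), "block"),
    PolicyRule("email_address",
               re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "redact"),
]


def apply_policy(text, rules=RULES):
    """Check text against each rule, blocking or redacting as configured."""
    violations = []
    for rule in rules:
        if rule.pattern.search(text):
            violations.append(rule.name)
            if rule.action == "block":
                return FilterResult(False, "", violations)
            text = rule.pattern.sub(f"[REDACTED:{rule.name}]", text)
    return FilterResult(True, text, violations)


def guarded_completion(prompt, call_llm, rules=RULES):
    """Filter the prompt, call the model, then filter the response."""
    checked_prompt = apply_policy(prompt, rules)
    if not checked_prompt.allowed:
        return "Request blocked by enterprise policy."
    response = call_llm(checked_prompt.text)
    checked_response = apply_policy(response, rules)
    if not checked_response.allowed:
        return "Response withheld by enterprise policy."
    return checked_response.text
```

The same check runs on both sides of the model call, so a prompt that leaks a confidential codename is stopped before it leaves the enterprise, and a response that contains personal data is masked before the user sees it. Recording the `violations` list would also give security teams an audit trail, subject to the same prompt-retention concerns raised above.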

Coincidentally, Microsoft CTO Kevin Scott was one of the signatories on the Center for AI Safety’s Statement on AI Risk:

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

Certainly, there are existential risks that come with new generations of AI. Those risks are well beyond the scope of this research, which instead addresses the risks of using what Matt Cain calls “Everyday AI”.